Step-1: Install dependencies

sudo apt-get update && sudo apt-get -y upgrade

sudo apt install zlib1g-dev build-essential libssl-dev libreadline-dev

sudo apt install libyaml-dev libsqlite3-dev sqlite3 libxml2-dev

sudo apt install libxslt1-dev libcurl4-openssl-dev

sudo apt install software-properties-common libffi-dev nodejs

sudo apt install git

sudo apt install nginx

sudo apt install autoconf bison build-essential libssl-dev libyaml-dev libreadline6-dev zlib1g-dev libncurses5-dev libffi-dev libgdbm-dev libsqlite3-dev
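The package lists above overlap, so it can help to confirm afterwards that nothing was skipped. A minimal sketch, assuming a Debian/Ubuntu system where dpkg-query is available; the function name missing_packages is made up for illustration:

```shell
# missing_packages PKG...: print each package that is not fully installed.
missing_packages() {
  for pkg in "$@"; do
    # An installed package has a status ending in "ok installed".
    dpkg-query -W -f='${Status}' "$pkg" 2>/dev/null | grep -q 'ok installed' || echo "$pkg"
  done
}

# Example: missing_packages build-essential libssl-dev libyaml-dev git nginx
```

No output means every package passed in is installed.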

Step-2: Set up rbenv

git clone https://github.com/rbenv/rbenv.git ~/.rbenv

echo 'export PATH="$HOME/.rbenv/bin:$PATH"' >> ~/.bashrc

echo 'eval "$(~/.rbenv/bin/rbenv init - bash)"' >> ~/.bashrc

source ~/.bashrc

rbenv init

type rbenv

Step-3: Set up ruby-build

git clone https://github.com/rbenv/ruby-build.git "$(rbenv root)"/plugins/ruby-build

Step-4: Install Ruby

RUBY_CONFIGURE_OPTS=--disable-install-doc rbenv install 2.7.5

rbenv global 2.7.5

rbenv rehash

echo "gem: --no-document" > ~/.gemrc
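To confirm that the shell now picks up the rbenv-managed Ruby rather than a system one, print where ruby and gem resolve from. A small sketch; the helper name report_tool_paths is hypothetical, and with rbenv active both paths would be expected to sit under ~/.rbenv/shims:

```shell
# report_tool_paths TOOL...: show which executable each command name resolves to.
report_tool_paths() {
  for tool in "$@"; do
    if resolved="$(command -v "$tool")"; then
      echo "$tool -> $resolved"
    else
      echo "$tool -> not found on PATH"
    fi
  done
}

# Example: report_tool_paths ruby gem
```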

Step-5: Install Rails

gem install rails

Step-6: Install Puma

gem install puma

Step-7: Install Bundler

gem install bundler -v 2.1.4 --no-document

or

gem install bundler --no-document

Step-8: Install Node.js and Yarn

sudo apt-get install nodejs

sudo apt install yarn

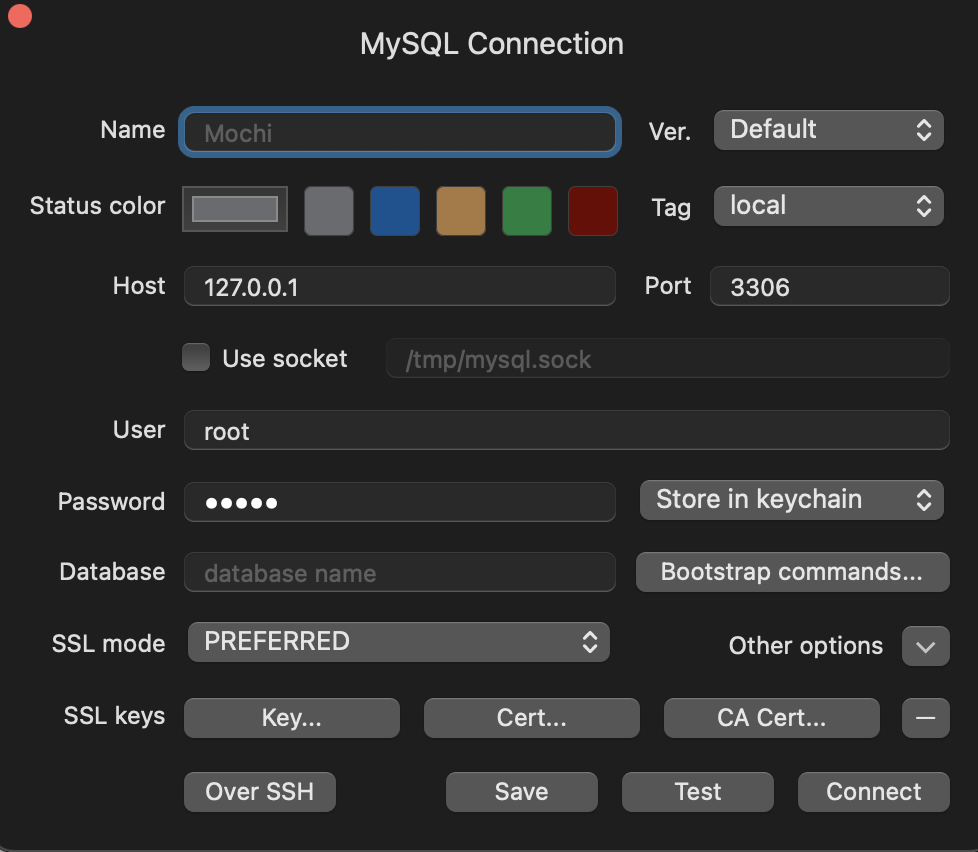

Step-9: Install MySQL Dependencies

sudo apt-get install libmysqlclient-dev

gem install mysql2 -v '0.5.0' --source 'https://rubygems.org/'

Step-10: Copy SSH public key to GitLab repo

ssh-keygen -o -t rsa -b 4096 -C "your_email@example.com"

After creating the SSH key, copy the public key to the GitLab repo,

then adjust the home folder permissions on the Ubuntu server:

chmod o+x $HOME
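The public key is the part that gets pasted into GitLab. A small sketch for printing it, assuming the default file name that ssh-keygen proposes for RSA keys (~/.ssh/id_rsa.pub); print_public_key is an illustrative helper, not a standard command:

```shell
# print_public_key [FILE]: print the public key, defaulting to ~/.ssh/id_rsa.pub.
print_public_key() {
  key_file="${1:-$HOME/.ssh/id_rsa.pub}"
  if [ -r "$key_file" ]; then
    cat "$key_file"
  else
    echo "No public key found at $key_file" >&2
    return 1
  fi
}

# Example: print_public_key
```

Once the key is added, running ssh -T git@gitlab.com should confirm access, assuming the repo lives on gitlab.com rather than a self-hosted instance.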

Step-11: Add Capistrano dependencies to the Gemfile

group :development do

gem "web-console"

gem 'capistrano'

gem 'capistrano-rails'

gem 'capistrano-rbenv'

gem 'capistrano-sidekiq'

gem 'capistrano-bundler'

gem 'capistrano3-puma'

end

Step-12: Initialize Capistrano

cd root-project

cap install STAGES=production
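cap install should generate a Capfile, config/deploy.rb, and one file per stage under config/deploy/. A small sketch to confirm that from the project root before editing anything; check_cap_files is an illustrative name, not part of Capistrano:

```shell
# check_cap_files: report any file that `cap install STAGES=production` should have created.
check_cap_files() {
  rc=0
  for f in Capfile config/deploy.rb config/deploy/production.rb; do
    if [ ! -e "$f" ]; then
      echo "missing: $f"
      rc=1
    fi
  done
  return "$rc"
}

# Example (from the project root): check_cap_files && cap production deploy:check
```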

Step-13: Write config file app/shared/config/database.yml

default: &default

  adapter: sqlite3

  pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>

  timeout: 5000

development:

  <<: *default

  database: db/development.sqlite3

test:

  <<: *default

  database: db/test.sqlite3

production:

  <<: *default

  database: db/production.sqlite3
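The shared/ directory has to exist on the server before files such as database.yml can be placed in it. A sketch of preparing that layout, assuming the deploy root /home/ubuntu/timetable that the systemd unit and nginx config in later steps point at; the function name and the exact directory list are illustrative:

```shell
# prepare_shared_dirs DEPLOY_ROOT: create the shared/ subdirectories used in this guide.
prepare_shared_dirs() {
  root="$1"
  # config holds database.yml; tmp/sockets is where nginx looks for puma.sock.
  # tmp/pids and log are assumed here as common Rails linked directories.
  mkdir -p "$root/shared/config" \
           "$root/shared/tmp/sockets" \
           "$root/shared/tmp/pids" \
           "$root/shared/log"
}

# Example: prepare_shared_dirs /home/ubuntu/timetable
```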

Step-14: Set up Puma

https://gist.github.com/arteezy/5d53d99f6ee617fae1f0db0576fdd418

sudo vim /etc/systemd/system/puma_timetable_production.service

[Unit]

Description=Puma HTTP Server for timetable (production)

After=network.target

[Service]

Type=simple

User=ubuntu

WorkingDirectory=/home/ubuntu/timetable/current

ExecStart=/home/ubuntu/.rbenv/bin/rbenv exec bundle exec --keep-file-descriptors puma -C /home/ubuntu/timetable/shared/puma.rb

ExecReload=/bin/kill -USR1 $MAINPID

StandardOutput=append:/home/ubuntu/timetable/current/log/puma.access.log

StandardError=append:/home/ubuntu/timetable/current/log/puma.error.log

Restart=always

RestartSec=1

SyslogIdentifier=puma

[Install]

WantedBy=multi-user.target

sudo systemctl daemon-reload

sudo systemctl start puma_timetable_production.service

sudo systemctl restart puma_timetable_production.service

sudo systemctl status puma_timetable_production.service

sudo systemctl enable puma_timetable_production.service

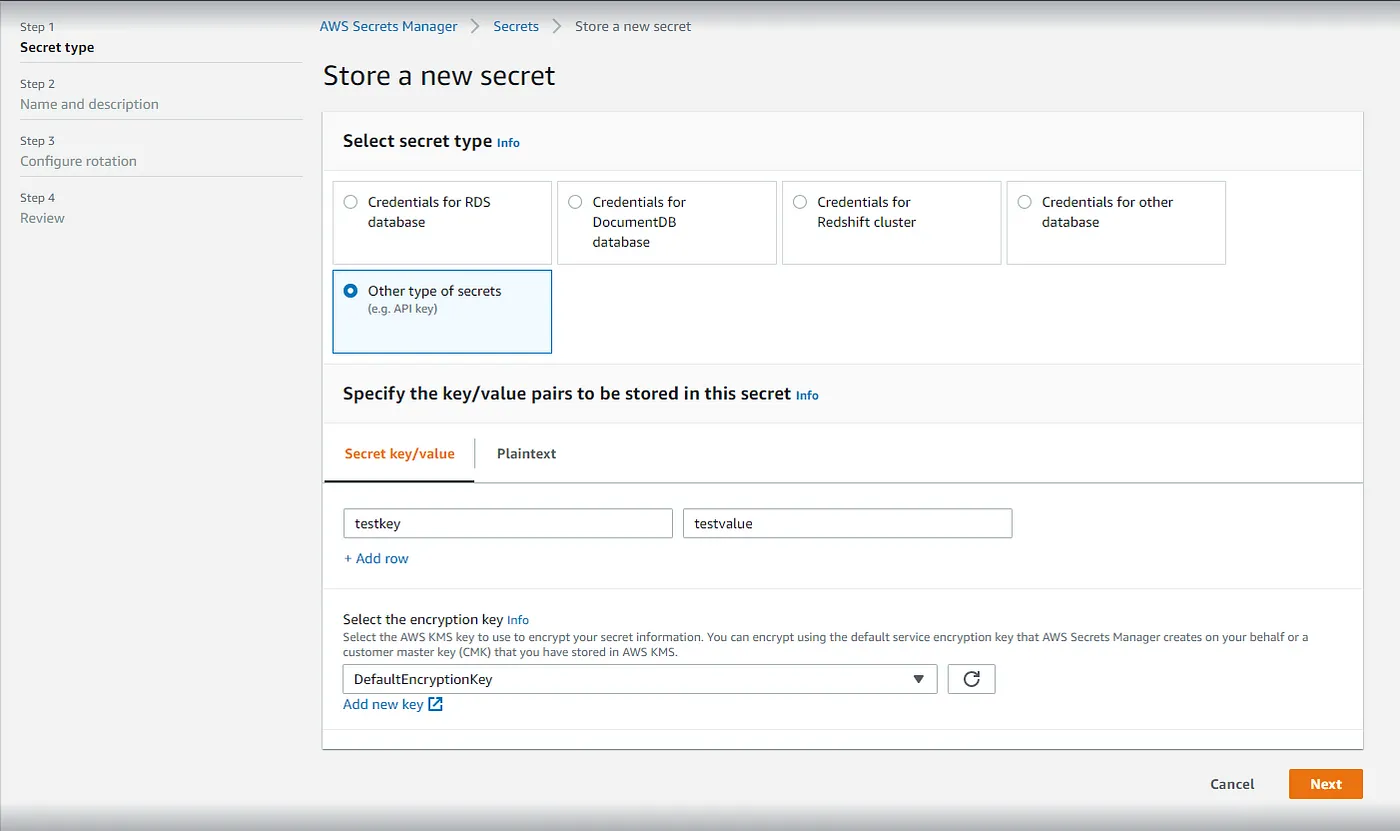
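For a scripted check instead of reading the status output by eye, systemctl is-active can be wrapped in a small helper. A sketch, assuming the unit name used above; puma_service_status is a hypothetical function name:

```shell
# puma_service_status [UNIT]: report whether the Puma unit is active.
puma_service_status() {
  unit="${1:-puma_timetable_production.service}"
  if systemctl is-active --quiet "$unit" 2>/dev/null; then
    echo "$unit is running"
  else
    echo "$unit is not running"
    # The journal holds systemd's own start/stop messages for the unit.
    echo "inspect with: sudo journalctl -u $unit -n 50 --no-pager"
    return 1
  fi
}

# Example: puma_service_status
```

Because the unit redirects Puma's own output to log/puma.access.log and log/puma.error.log, those files are the place to look for application errors.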

Step-15: Set secret key

Run rake secret on your local machine to generate a key.

Create the file config/secrets.yml and add the generated key to it:

production:

  secret_key_base: asdja1234sdbjah1234sdbjhasdbj1234ahds…

Commit the change, then redeploy the application.
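rake secret prints 64 random bytes as a 128-character hex string. If Ruby is not handy on the machine, a key of the same shape can be produced from /dev/urandom. A minimal sketch, assuming GNU od and a hypothetical helper name write_secrets_yml; it is a stand-in for rake secret, not what Rails runs internally:

```shell
# write_secrets_yml PATH: write a production-only secrets.yml with a fresh random key.
write_secrets_yml() {
  target="$1"
  # 64 random bytes rendered as 128 lowercase hex characters.
  key="$(od -An -tx1 -N 64 /dev/urandom | tr -d ' \n')"
  printf 'production:\n  secret_key_base: %s\n' "$key" > "$target"
  # The key is a credential, so keep the file private to the current user.
  chmod 600 "$target"
}

# Example: write_secrets_yml config/secrets.yml
```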

Step-16: Set up NGINX

nginx.conf

upstream timetable_app {

  server unix:/home/ubuntu/timetable/shared/tmp/sockets/puma.sock fail_timeout=0;

}

server {

  listen 80;

  server_name _;

  root /home/ubuntu/timetable/current/public;

  location / {

    try_files $uri/index.html $uri @timetable_app;

  }

  location @timetable_app {

    proxy_set_header X-Real-IP $remote_addr;

    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

    proxy_set_header X-Forwarded-Proto $scheme;

    proxy_set_header Host $http_host;

    proxy_redirect off;

    proxy_pass http://timetable_app;

  }

  error_page 500 502 503 504 /500.html;

  client_max_body_size 4G;

  keepalive_timeout 10;

}
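One common cause of a 502 Bad Gateway with this setup is that the socket named in the upstream block does not exist, for example because Puma is bound to a different path. A sketch that reads the socket path out of the config and checks it; check_puma_socket is an illustrative helper, and the config file location in the example is an assumption:

```shell
# check_puma_socket CONF: find the first "server unix:..." line in an nginx
# config file and report whether that socket exists.
check_puma_socket() {
  conf="$1"
  sock="$(sed -n 's/^[[:space:]]*server[[:space:]]\{1,\}unix:\([^[:space:];]*\).*/\1/p' "$conf" | head -n 1)"
  if [ -z "$sock" ]; then
    echo "no unix socket upstream found in $conf"
    return 1
  elif [ -S "$sock" ]; then
    echo "socket present: $sock"
  else
    echo "socket missing: $sock"
    return 1
  fi
}

# Example, assuming the config was saved as /etc/nginx/sites-enabled/timetable:
#   sudo nginx -t && check_puma_socket /etc/nginx/sites-enabled/timetable && sudo systemctl reload nginx
```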